Effective Altruism After Sam Bankman-Fried

By Kieran Dearden

Effective Altruism (EA) is, effectively, the idea that if someone seeks to improve the world, it is rational to do so in the most efficient and effective ways possible. The methodology typically distills down to comparisons of units, outcomes, and costs, with effective altruists seeking the most cost- or work-efficient ways of increasing the relative well-being of individuals [1]. A straightforward example is the comparison between mosquito nets and chemotherapy: if it takes $3500 to prevent an individual from dying of malaria by supplying mosquito nets, and $8000 to prevent another individual from dying of cancer by administering chemotherapy, all other things being equal, the effective altruist will invest in mosquito nets and not chemotherapy.

The other major component of effective altruism entails the consideration of cost-efficiency over time [2], where one must weigh up the benefits of being altruistic now or hold off for greater gains in the future. For example, one could donate $10,000 per year for ten years to an effective charity, or invest that same amount into an index fund, wait ten years, and donate $110,000 instead (inflation notwithstanding). All else being equal, the effective altruist chooses to invest and wait, as the effectiveness of the same resources is greater if one saves instead of spends.
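
The give-now-versus-give-later comparison above can be made concrete with a small sketch. The 2% annual return and the end-of-year deposits are assumptions chosen purely for illustration, since the example does not state a rate of return, and the function name is hypothetical.

```python
def future_value_of_annual_deposits(deposit, annual_return, years):
    """Value of depositing `deposit` at the end of each year for `years` years."""
    balance = 0.0
    for _ in range(years):
        # Grow last year's balance, then add this year's deposit.
        balance = balance * (1 + annual_return) + deposit
    return balance


# Option A: donate $10,000 per year for ten years.
donated_now = 10_000 * 10

# Option B: invest the same deposits, then donate the lump sum.
# ASSUMPTION: a 2% annual return, picked only for illustration.
donated_later = future_value_of_annual_deposits(10_000, 0.02, 10)

print(f"Give as you go:  ${donated_now:,.0f}")
print(f"Invest and wait: ${donated_later:,.0f}")
```

Under these assumptions the invested total comes to roughly $109,500, close to the $110,000 used in the example; a higher assumed return widens the gap.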

These values draw philanthropists like Sam Bankman-Fried, possibly the most (in)famous of the movement’s adherents, to effective altruism. Managing the cost-efficiency of a resource over time and jumping on effective investments when they arise are philanthropic bread and butter. The reader will not be surprised that several considerations and concerns arise within effective altruism. Many of them help explain how Sam Bankman-Fried (henceforth SBF) maintained his status within the community of altruists despite being the least altruistic member of the group [3], and how his actions around the FTX fraud were in part justified by these considerations, despite being denounced by the Effective Altruism community after FTX filed for bankruptcy [4].

Commitments of Effective Altruism

William MacAskill, an Associate Professor at the University of Oxford and one of the key founders of the effective altruism movement, proposes effective altruism as being “the project of using evidence and reason to try to find out how to do the most good, and on this basis trying to do the most good” [1]. This outline is what organizations like GiveWell and Giving What We Can (both founded by prominent EA members) use to direct their efforts. By analyzing other charities and projects through evidence- and reason-based methods, such as randomized controlled trials and regression analyses [5], these organizations seek to establish a list of charities and projects doing the most good empirically. On this basis, EA members decide to try to do the most good, by investing in these select charities and projects.

Given that philanthropic donations to charities reached over $200 billion as of 2015 [6], and the stakes at play are human lives, it should be uncontroversial to employ evidence- and reason-based evaluations of these charities and their methods [5]. There are concerns about the limitations of these methods [7]. But the idea that we should be efficient with physical resources, and that the best way to do so is through empirical means, is difficult to argue against. A failure to be efficient with resources directly results in fewer people being aided by these projects, and thus more people remaining blind, starving, or dying.

Breakdown of Effective Altruism

1. Effective Altruism is a Movement, not a Normative Theory

While Effective Altruism focuses on being altruistic and promoting the “most good”, it is not a normative theory: it does not prescribe actions, traits, or values that someone ought to follow. Instead, EA is a movement or project, with the aim of trying to do the most good through whatever actions are required, guided by any trait or value the person involved holds to be good. MacAskill himself considers Effective Altruism to be tentatively welfarist, as the good it aims to achieve is in the form of an increase in well-being for the people affected by EA’s actions and investments [1]. MacAskill does acknowledge that other conceptions of the good are compatible with EA. Yet non-welfarist views of the good are a small minority within the EA community [8] and are not prominent among EA leaders, making the movement predominantly welfarist, even if it does not specifically prescribe welfarist actions or values.

With this focus on welfare, effective outcomes, and the most good, effective altruism appears to be utilitarian in nature. Both the effective altruist and the utilitarian agree that saving two lives over one for the same resources would bring about the most good, not because of virtuous or deontological motivations, but because the consequences of the actions lead to a better outcome. However, it is here that effective altruism’s status as a movement and not a normative moral theory becomes evident. MacAskill specifically states that the movement is not utilitarian [1], meaning that effective altruists are not committed to pursuing the greatest amount of good for the greatest number of people, but to providing the most effective aid they can for the resources they are willing to provide. The primary difference between these views is that, as a normative ethic, utilitarianism demands that we always seek the actions and outcomes that result in the greatest amount of good for the greatest number of people. The effective altruist is only committed to the common-sense view that if one were to donate, it is better to do so efficiently rather than inefficiently.

This lack of normative pressure helps effective altruists avoid one of utilitarianism’s main criticisms: that of being unrealistically demanding of its adherents. Yet the lack of normative pressure also means there is no real motivation for an EA member to donate to a charity. If they do choose to donate, they need only remember that it is better to donate efficiently. As the Effective Altruism movement allows for multiple conceptions of the good, it cannot prescribe any charity or organization that one ought to donate to. Instead, among the organizations and charities pursuing a given goal, the movement recommends the one that achieves that goal most efficiently. The decision and pressure to donate, to actually do the most good, remain with the individual members and their own values. (The most recent demographic survey found that the Effective Altruism movement is 76% white, less than 1% Black, 2% Hispanic, and 0% Indigenous, and 71% male.)

As the decision to donate lies with the individual, and as it may be considered efficient to let wealth accrue more wealth to donate more, Bankman-Fried’s comparative lack of altruistic donations does little to invalidate his membership of the movement. Before the FTX crash, SBF claimed he would eventually be able to donate at least 900 times what he had already given, which was at the time $25 million, or 0.1% of his fortune [3]. To put this into perspective, Giving What We Can, MacAskill’s meta-charity organization, whose members are encouraged to donate at least 10% of their income, raised a total of $1.5 billion from 3500 members [2]. Had SBF managed to keep his promise of a 900-fold increase, he alone would have donated $22.5 billion. In the face of such potential long-term generosity, SBF faced little criticism from the EA community for donating far less than he could have initially afforded. Had the $25 million he did donate gone to ineffective charities or organizations, he would have faced at least some criticism, as there is still pressure within the group to donate effectively, if not necessarily to donate.
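
The figures in this paragraph can be checked with a few lines of arithmetic. This is only a sanity check on the numbers quoted above [2,3]; the variable names are illustrative.

```python
# Figures quoted in the text above.
sbf_donated = 25_000_000        # SBF's donations at the time
promised_multiple = 900         # the promised 900-fold increase
gwwc_total = 1_500_000_000      # total attributed to Giving What We Can
gwwc_members = 3_500

sbf_promised = sbf_donated * promised_multiple

print(f"Promised total: ${sbf_promised:,}")
print(f"Multiple of the GWWC total: {sbf_promised / gwwc_total:.0f}x")
print(f"GWWC average per member: ${gwwc_total / gwwc_members:,.0f}")
```

On these numbers, the promised total is fifteen times the entire Giving What We Can figure, which helps explain why the promise carried so much weight.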

2. Longtermism and the Drowning Child

MacAskill raises several arguments when considering where and when to donate as an effective altruist [2], including a general reduction of cost-efficient solutions, expected cost-efficiency over time, social and financial investment returns, and whether we ought to value present generations over future ones. Using the relative strength of each factor, MacAskill constructs a framework for determining whether one ought to contribute now or later, given a set of inputs. However, he specifically states that valuing the interests of people alive today over the potential interests of potential people in the future would fail to treat all individuals’ interests equally [2].

This notion stems from Peter Singer, a long-standing utilitarian and early advocate of the practice of earning to give [9], who served as the original inspiration for Effective Altruism, and still advocates for many of the movement’s short- and medium-term goals. He argued that the relative distance between you and someone whose suffering you could alleviate ought not to have moral weight. Your moral obligation to save a drowning child right in front of you is no stronger than the moral obligation to save a drowning child a house, block, or city away (provided you knew of them and could rescue them) [9]. Singer extends the argument to the alleviation of suffering of people in other countries, as instant communication and swift transportation eliminate many of the issues around the requirements of knowledge and the capacity to aid, and the jump in distance from city to country is not morally relevant. Thus, there is no moral reason to value the interests of the child drowning in front of you over the child drowning a country away, only practical issues. Similarly, MacAskill argues the distance in time between the child drowning in front of you today and the child drowning in front of you in a week’s time is not a moral consideration; claiming you would save one but not the other is to hold the interests of one individual over the interests of another, with the only justification stemming from a distance in time [2]. Thus, to spend resources now to save a single drowning child today, instead of conserving those resources for an opportunity to save two drowning children tomorrow, is to hold the interests of one individual over the interests of two future individuals. Were the two children to be drowning today instead of tomorrow, it would be morally irresponsible to save the first drowning child instead.

The equal consideration of individual interests across time leads to some very unintuitive conclusions. If we ought to value the interests of individuals regardless of how far in the future they are, then it follows that we ought to value the interests of a present individual the same as an individual half a million years in the future [10]. This view is known as ‘longtermism’, and, while it may seem absurd, MacAskill, Toby Ord, and SBF endorsed this longtermist view and its concerns [11]. This endorsement from prominent EA members in positions of power resulted in a significant deviation of the focus of the EA movement from a mixture of present and near-future donations and commitments to almost purely long-term future concerns [12]. Even after details of the FTX fraud case arose, causing a large portion of the EA movement to turn against SBF [10], longtermism’s validity was unharmed. Most of the blame fell on SBF’s deception [13] or how those involved entirely abandoned the principles of the Effective Altruism community [4], cordoning off the FTX case as an issue with SBF, not EA or longtermism.

These long-term concerns are mostly in the form of “x-risks” [12], or existential risks: the risk that some event will threaten the existence of humanity at some point in the future. As those holding a longtermist position value the interests of individuals in the future with the same moral weight as present individuals, any potential existential risk threatens the interests of not just those present during this event, but all those who come after. MacAskill proposes the Earth could host 10 quadrillion people over the next billion years [1]; thus, were any event to threaten the extinction of humanity at any time during these billion years, such an event would threaten the well-being of 10 quadrillion people. With so many lives on the line, almost any investment towards averting any existential risk, no matter how low the probability of such an event occurring, is likely to be more cost-effective than any aid program dealing with present issues.

This conclusion logically leads to diverting funding from known, cost-effective aid programs like the Against Malaria Foundation (AMF) to initiatives targeting climate change, pandemic-preparedness, nuclear risks, and artificial intelligence [12]. One concrete example of this longtermist viewpoint is the $5.2 million donated by OpenPhil, a philanthropic organization formed by GiveWell and substantial funder of EA projects, to ESPR, a European organization that acts as a pipeline for teenagers to focus on AI safety [12,14,15]. For the same cost, nearly 1500 people could have been protected against malaria, instead of sending a bunch of teenagers on a summer camp with the hope that doing so will in some way avert extinction via artificial intelligence. However, this investment is justified by longtermism, as $5.2 million spent to save 10 quadrillion lives is thousands of times more cost-efficient than the $3500 for bed-nets could ever be. MacAskill is clearly aware of this tradeoff, stating that engaging in activities that slightly reduce the possibility of some existential risk may do more good than some activity that saves a thousand lives today [1].
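
The bed-net comparison above is simple division. As a rough check, the sketch below sums the two Open Philanthropy grants to ESPR as listed in the works cited [14,15] and divides by the $3500 figure from the opening example. Treating that figure as an exact cost per person is an assumption of the comparison, not an established number.

```python
# Grant amounts as listed in the works cited [14, 15].
espr_grants = [510_000, 4_715_000]
# Per-person bed-net cost from the essay's opening example (illustrative).
cost_per_person_bed_nets = 3_500

total_granted = sum(espr_grants)
people_protected = total_granted // cost_per_person_bed_nets

print(f"Total granted to ESPR: ${total_granted:,}")
print(f"Bed-net equivalent: {people_protected:,} people")
```

Using the exact grant total rather than the rounded $5.2 million gives a little over 1,490 people, consistent with the “nearly 1500” figure above.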

Making an even more direct and striking comparison, MacAskill claims that $1 billion spent for a 0.001% absolute reduction in existential risk would still, under his most conservative estimate, be 4,000 times more cost-efficient than bed-net distribution [16]. Using these calculations, it becomes clear that nearly any investment of any size with any chance of reducing the risk of extinction by any degree is justified under longtermism. This inference has led to a variety of investments by EA-affiliated organizations, all seeking to reduce the possibility of x-risks by any means. These investments range from therapy and productivity support [17] to personal assistants and SAD lamps [18], all funded by the EA movement, and targeted specifically at those within the movement working on these problems.
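
The expected-value reasoning behind comparisons of this kind can be sketched in a few lines. The sketch below is not MacAskill’s calculation: it plugs in the 10-quadrillion population figure quoted earlier rather than his most conservative estimate, which is not stated here, so the resulting ratio differs from the 4,000 figure. The $3500 bed-net cost is the illustrative figure from the opening example.

```python
from fractions import Fraction

# ASSUMPTION: 10 quadrillion future people, the larger figure quoted
# earlier in the essay, not MacAskill's "most conservative estimate".
future_people = 10**16
risk_reduction = Fraction(1, 100_000)   # 0.001% absolute reduction
program_cost = 10**9                    # $1 billion
bed_net_cost_per_life = 3_500           # illustrative figure from the essay

# Expected lives saved = people at stake x reduction in extinction probability.
expected_lives = future_people * risk_reduction
cost_per_expected_life = Fraction(program_cost) / expected_lives
ratio = bed_net_cost_per_life / cost_per_expected_life

print(f"Expected lives saved: {int(expected_lives):,}")
print(f"Cost per expected life: ${float(cost_per_expected_life):.2f}")
print(f"Times more cost-efficient than bed-nets: {int(ratio):,}")
```

Because the population term is so large, even a tiny change in probability yields an enormous expected number of lives, which is why the conclusion is so sensitive to the assumed population and probability inputs.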

Singer initially acknowledged x-risks as legitimate concerns and agreed that potential future generations are equally considerable to present generations [19]. Yet eight years later, he would warn against longtermism and existential risk shrinking the moral value of current issues to almost nothing, while providing a rationale for doing almost anything to avert long-term existential risks [20]. Singer likened this rationale to that of the Soviets and Nazis, who sought to change and control the long-term future of humanity, using the potential future gains to justify immoral acts in the present. He also indirectly likens himself to Marx, whose initial vision was used and twisted to justify a position that could never be contemplated in the vision’s original form [20]. Thus, while Singer is a prominent member of the EA movement, he is far from a supporter of the longtermist direction of the movement.

Despite Singer’s concerns, Sam Bankman-Fried, a noted endorser of longtermist ideas [11], along with MacAskill and four other EA leaders, established the FTX Future Fund, which leveraged profits from FTX to fund future-focused EA projects like Rethink and Nonlinear, with at least $100 million and as much as $1 billion in funding. Had FTX not crashed six months after the founding of this fund, a great deal more money would have been dedicated to these longtermist causes under the same questionable justifications.

Conflict and Contradiction Within the EA Movement

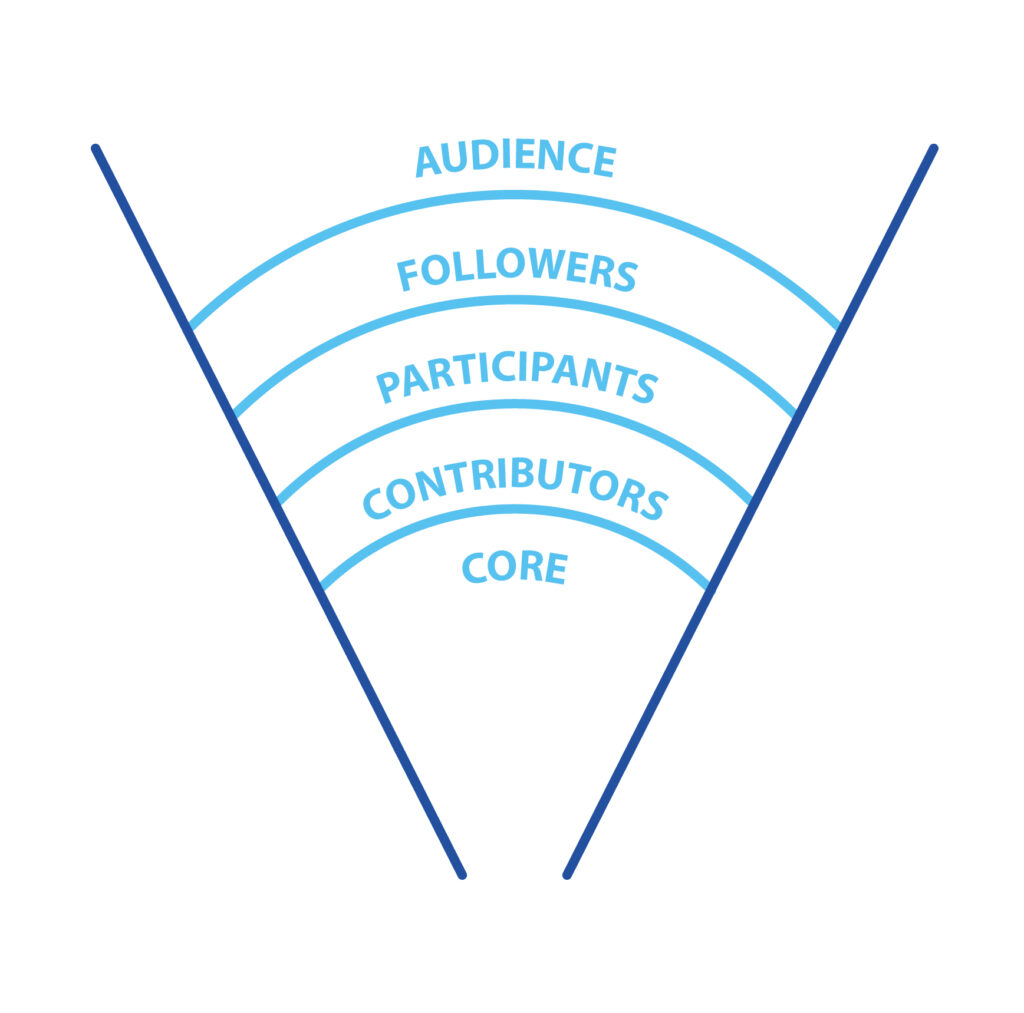

This apparent jump from near-term to long-term issues, and the possible incompatibility between near-term, outward-facing projects like AMF and long-term, inward-facing projects like ESPR, make it unclear what Effective Altruism is meant to be about. If EA were a normative moral theory, then this disparity would need to be resolved, either by concessions or by splitting the theory into two cogent forms. However, EA is a movement, with specific leaders, dozens of directly related organizations, and billions in potential and actualized funds [2]. The movement relies on attracting new members and investors. Notably, most new members are drawn to EA by its near-term projects and apparent focus on effectiveness. In contrast, the leaders and major contributors are focused on long-term projects and existential concerns. This results in what the Centre for Effective Altruism itself refers to as the funnel model [21] (figure 1).

In this model, the public-facing side of EA concentrates on the near-term, tangible projects like bed-nets and eye surgeries to increase membership numbers and available funding. Select members are then funneled down towards the core of the movement that focuses on the less tangible longtermist issues. [21]

Public-facing/Grassroots EA (Audience, Followers, Participants)

- Main focus is effective giving à la Peter Singer (GWWC, TLYCS, GiveWell)

- Main cause area is global health and development targeting the ‘distant poor’ in developing countries (AMF, SCI, GiveDirectly)

- Some focus on (mainstream) animal welfare (factory farming, promoting veg*nism)

- Some interest in (mainstream) x-risk/longtermism (climate change, pandemics, nuclear risks)

- Donors support organizations doing direct anti-poverty work (e.g. AMF, SCI, GiveDirectly)

Core/Highly-Engaged EA (Contributors, Core)

- Main focus is x-risk/‘longtermism’ à la Nick Bostrom, Nick Beckstead, and Eliezer Yudkowsky (FHI, GPI, 80K, MIRI, EA Foundation/CLR)

- Main cause areas are x-risk, AI-safety, biotechnology, ‘global priorities research’, and EA movement-building

- More focus on wild animal suffering à la David Pearce, insect suffering, welfare of digital minds (‘suffering subroutines’), in addition to mainstream animal welfare

- Donors support highly-engaged EAs to build career capital, boost their productivity, and/or start new EA organizations; research; policy-making/agenda-setting. [12]

This explicit division raises questions, both internally and externally, around how EA ought to operate, how it is structured, and what it truly stands for. This method of drawing people in with one palatable cause, then gradually shifting focus to a far more controversial cause, has been likened to bait-and-switch or indoctrination, and the knowledge and belief hierarchy to that of a conspiracy or church [22,23]. Others are concerned that these inward-facing investments, like personal assistants, global retreats, and property investments, are no longer altruistic in nature, instead funding a cushy lifestyle for core EA members, and question whether the movement should even be called Effective Altruism at all [24,25,26]. MacAskill condemned SBF for deceiving others following the FTX fraud case [4]. Yet the deliberate obfuscation of ‘core’ EA values for fear of driving away support certainly seems like deception on the part of EA.

While it is questionable whether some inward-focused longtermist investments are truly altruistic, they are, at least in theory, justified by possible lives saved. Critically, it is unclear just how effective the longtermist Effective Altruism projects truly are. One of the key public-facing draws to EA is its use of evidence and reason to determine the most effective donation opportunities [1], as opposed to making emotionally or politically driven donations. Yet both Holden Karnofsky (co-founder of GiveWell) and Ben Todd (co-founder of 80,000 Hours) have expressly stated they do not think the best way to be altruistic is necessarily through evidence and reason [27,28]. This stance directly contradicts the public-facing EA values but is necessary to justify a significant portion of longtermist funding. When considering the impacts of welfare projects targeting people half a million years in the future, evidence-based methodologies have next to nothing to work with. Philosophical reasoning allows longtermism to value these future individuals to the same degree as present individuals but cannot possibly evaluate the needs or capabilities of time-distant people beyond binary states of life and death. No reasonable amount of present evidence would be sufficient to establish the need or relative cost-efficiency of bed-nets at such a distant point in the future, for example. This means that, while EA can utilize evidence and reason to justify the effectiveness of specific present and near-term interventions, the only claims the movement can make for long-term welfare issues are those of sweeping generalities, i.e., that these people’s lives matter, and it would be altruistic to save them. The only identifiable threats to the welfare of future individuals at this timescale are those of mass extinction, as there are too many unknown factors to determine any narrower specifics. Thus, EA cannot rely on evidence-based methodologies like randomized controlled trials to evaluate the effectiveness of longtermist interventions but must instead rely on broad estimates of possible values, betting upon 0.001% chances and leveraging the potential existence of 10 quadrillion people to justify the hiring of personal assistants.

The Enemy Within Effective Altruism

While the public-facing values of Effective Altruism are appealing, and the near-term benefits of select EA projects are genuinely transformative (AMF have reportedly protected nearly half a billion people from malaria [29]), the core of EA is undeniably longtermist. The internal structure of the movement funnels individuals from these public-facing values to longtermist values, and in doing so, funnels funding from near-term benefits to long-term concerns. Due to the absurdity of the numbers involved in longtermism, almost any investment towards averting an existential risk by any degree is justified, leading to investments that are incompatible with EA’s public-facing values. SBF’s comparative lack of altruistic donations and his handling of FTX are similarly incompatible with EA’s public-facing values, but they are justifiable under a (consequentialist) longtermist view. The Effective Altruism movement may well provide immediate, tangible, and legitimate benefits to a variety of welfare projects, with GiveWell alone donating $127 million to non-longtermist charities [30]. Yet the direction, future, and legitimacy of the movement become more questionable the further it slides towards longtermism, or, more accurately, the more the movement’s longtermist roots become exposed.

Appendix 1: Prominent Effective Altruism members/leaders:

- Peter Singer (Original inspiration for the EA movement, founder of The Life You Can Save)

- Holden Karnofsky (Co-founder of GiveWell, CEO of Open Philanthropy)

- Elie Hassenfeld (Co-founder of GiveWell)

- Toby Ord (Founder/Co-founder of Giving What We Can, Co-founder of the Centre for Effective Altruism)

- William MacAskill (Co-founder of Giving What We Can, 80,000 Hours, and the Centre for Effective Altruism)

- Nick Beckstead (Co-founder of the Centre for Effective Altruism)

- Michelle Hutchinson (Co-founder of the Centre for Effective Altruism)

- Benjamin Todd (Co-founder of 80,000 Hours)

- Dustin Moskovitz (Co-founder of Good Ventures and Open Philanthropy)

- Cari Tuna (Co-founder of Good Ventures and Open Philanthropy)

- Sam Bankman-Fried (Director of Development for the Centre for Effective Altruism, primary funder of the FTX Future Fund)

Works Cited:

- MacAskill, William, and Theron Pummer. “Effective Altruism.” The International Encyclopedia of Ethics, edited by Hugh LaFollette, 1st ed., Wiley, 2020, pp. 1–9. DOI.org (Crossref), https://doi.org/10.1002/9781444367072.wbiee883.

- MacAskill, William. “The Definition of Effective Altruism.” Effective Altruism: Philosophical Issues, edited by Hilary Greaves and Theron Pummer, Oxford University Press, 2019, pp. 3-17. https://doi.org/10.1093/oso/9780198841364.003.0001

- Ehrlich, Steven, and Chase Peterson-Withorn. “Meet the World’s Richest 29-Year-Old: How Sam Bankman-Fried Made A Record Fortune In The Crypto Frenzy.” Forbes, Oct. 2021. https://www.forbes.com/sites/stevenehrlich/2021/10/06/the-richest-under-30-in-the-world-all-thanks-to-crypto/?sh=51883a5c3f4d.

- Szalai, Jennifer. “How Sam Bankman-Fried Put Effective Altruism on the Defensive.” New York Times, Dec. 2022. https://www.nytimes.com/2022/12/09/books/review/effective-altruism-sam-bankman-fried-crypto.html.

- Côté, Nicolas, and Bastian Steuwer. “Better Vaguely Right than Precisely Wrong in Effective Altruism: The Problem of Marginalism.” Economics and Philosophy, vol. 39, no. 1, Mar. 2023, pp. 152–69. DOI.org (Crossref), https://doi.org/10.1017/S0266267122000062.

- Singer, Peter. “The Most Good You Can Do: How Effective Altruism is Changing Ideas about Living Ethically.” Yale University Press, 2015. https://doi.org/10.12987/9780300182415

- Deaton, Angus, and Nancy Cartwright. Understanding and Misunderstanding Randomized Controlled Trials. National Bureau of Economic Research, Sep. 2016. http://www.nber.org/papers/w22595.

- Berkey, Brian. “The Philosophical Core of Effective Altruism.” Journal of Social Philosophy, vol. 52, no. 1, Mar. 2021, pp. 92–113. DOI.org (Crossref), https://doi.org/10.1111/josp.12347.

- Singer, Peter. “Famine, Affluence, and Morality.” Philosophy & Public Affairs, vol. 1, no. 3, 1972, pp. 229-243. JSTOR, http://www.jstor.org/stable/2265052.

- Englehardt, Elaine E. and Philosophy Documentation Center. “The Duel between Effective Altruism and Greed.” Teaching Ethics, vol. 23, no. 1, 2023, pp. 1–14. DOI.org (Crossref), https://doi.org/10.5840/tej2023823135.

- Lewis-Kraus, Gideon. “The Reluctant Prophet of Effective Altruism.” The New Yorker, Aug. 2022. https://www.newyorker.com/magazine/2022/08/15/the-reluctant-prophet-of-effective-altruism

- Gleiberman, Mollie. “Effective Altruism and the Strategic Ambiguity of ‘Doing Good’.” University of Antwerp, Apr. 2023. https://medialibrary.uantwerpen.be/files/8518/0e580e13-0ea2-4c3f-bb2e-1b8e27c93628.pdf.

- Beckstead, Nick, et al. “The FTX Future Fund team has resigned.” Nov. 2022. https://forum.effectivealtruism.org/posts/xafpj3on76uRDoBja/the-ftx-future-fund-team-has-resigned-1. Accessed 16/2/2024.

- Open Philanthropy. “Grant – European Summer Program on Rationality (ESPR), General Support ($510,000).” Jul. 2019. https://www.openphilanthropy.org/grants/european-summer-program-on-rationality-general-support/.

- Open Philanthropy. “Grant – European Summer Program on Rationality (ESPR), General Support ($4,715,000).” May 2022. https://www.openphilanthropy.org/grants/european-summer-program-on-rationality-general-support-2022/.

- Greaves, Hilary, and William MacAskill. “The Case for Strong Longtermism.” Global Priorities Institute, Jun. 2021. https://globalprioritiesinstitute.org/wp-content/uploads/The-Case-for-Strong-Longtermism-GPI-Working-Paper-June-2021-2-2.pdf

- Rethink Wellbeing. “Rethink Wellbeing – Home.” 2023. https://www.rethinkwellbeing.org/. Accessed 6/2/2024.

- Nonlinear. “The Productivity Fund: A low-barrier fund paying for productivity enhancing tools for longtermists.” 2022. https://web.archive.org/web/20220803123336/https://www.nonlinear.org/productivity-fund.html. Accessed 6/2/2024.

- Singer, Peter, et al. “Preventing Human Extinction.” EA Forum, Aug. 2013. https://forum.effectivealtruism.org/posts/tXoE6wrEQv7GoDivb/preventing-human-extinction. Accessed 20/2/2024.

- Singer, Peter. “The Hinge of History.” Project Syndicate, Oct. 2021. https://www.project-syndicate.org/commentary/ethical-implications-of-focusing-on-extinction-risk-by-peter-singer-2021-10. Accessed 20/2/2024.

- Centre for Effective Altruism. “The Funnel Model”. 2018. https://www.centreforeffectivealtruism.org/the-funnel-model. Accessed 6/2/2024.

- Hilton, Sam. Comment on: What will 80,000 Hours provide and not provide within the EA community. EA Forum, Apr. 2020. https://forum.effectivealtruism.org/posts/dC8w35Y9G7gyWhNHK/what-will-80-000-hours-provide-and-not-provide-within-the?commentId=Nx5H6picuMFtvpxCe. Accessed 20/2/2024.

- Kulveit, Jan. Comment on: Max Dalton: I’m pleased to announce the second edition of the effective altruism handbook. May 2018. https://web.archive.org/web/20200929190922/https://www.facebook.com/groups/effective.altruists/permalink/1750780338311649/?comment_id=1752865068103176. Accessed 20/2/2024.

- Beardsell, Paul. Comment on: Free-spending EA might be a big problem for optics and epistemics. EA Forum, Apr. 2022. https://forum.effectivealtruism.org/posts/HWaH8tNdsgEwNZu8B/free-spending-eamight-be-a-big-problem-for-optics-and?commentId=FtdYrpmLfaEdF5ekx. Accessed 20/2/2024.

- Rosenfeld, George. “Free-spending EA might be a big problem for optics and epistemics.” EA Forum, Apr. 2022. https://forum.effectivealtruism.org/posts/HWaH8tNdsgEwNZu8B/. Accessed 20/2/2024.

- ParthThaya. “Effective Altruism is no longer the right name for the movement”. EA Forum, Aug. 2022. https://web.archive.org/web/20220911050439/https://forum.effectivealtruism.org/posts/2FB8tK9da89qksZ9E/effective-altruism-is-no-longer-the-right-name-for-the-1. Accessed 20/2/2024.

- GiveWell. “Clear Fund Board Meeting – January 24, 2013.” 2013. https://www.givewell.org/about/official-records/board-meeting-21. Accessed 10/2/2024.

- Koehler, Arden, et al. “Benjamin Todd on the core of effective altruism and how to argue for it.” Sep. 2020. https://80000hours.org/podcast/episodes/ben-todd-on-the-core-of-effective-altruism/. Accessed 10/2/2024.

- Against Malaria Foundation. “2021 Round up and update” Dec. 2021. https://www.againstmalaria.com/NewsItem.aspx?newsitem=2021-Round-up-and-update-Thank-you-for-your-support. Accessed 10/02/2024.

- GiveWell. “GiveWell Metrics Report – 2019 Annual Review.” 2019. https://files.givewell.org/files/metrics/GiveWell_Metrics_Report_2019.pdf. Accessed 10/2/2024.

Photo courtesy of HeatherPaque